In the fast-evolving landscape of artificial intelligence (AI) and software development, the importance of quality assurance cannot be overstated. As AI models are increasingly deployed to generate code, ensuring the accuracy, efficiency, and reliability of these code outputs becomes crucial. Continuous testing emerges as a vital practice in this context, playing a pivotal role in maintaining the integrity and performance of AI-generated code. This article delves into the concept of continuous testing in AI code generation, exploring its significance, methodologies, challenges, and best practices.

What Is Continuous Testing?

Continuous testing refers to the process of executing automated tests throughout the software development lifecycle to ensure the software is always in a releasable state. Unlike traditional testing, which often occurs at specific stages of development, continuous testing integrates testing activities into every stage, from coding and integration to deployment and maintenance. This approach allows for immediate feedback on code changes, facilitating quick identification and resolution of issues.
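As a rough illustration of the "releasable state" idea, the sketch below runs a small automated test suite and reduces the result to a single pass/fail signal that a pipeline could check on every change. The function `apply_discount`, the test class, and the helper `is_releasable` are hypothetical names chosen for this example; only the standard-library `unittest` module is assumed.

```python
import io
import unittest


def apply_discount(price, percent):
    """Example function under test (hypothetical, for illustration only)."""
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount(self):
        self.assertEqual(apply_discount(80.0, 0), 80.0)


def is_releasable(test_case_class):
    """Run every test in the class and report whether all of them passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(test_case_class)
    # Send the runner's report to an in-memory buffer to keep output quiet.
    runner = unittest.TextTestRunner(stream=io.StringIO(), verbosity=0)
    return runner.run(suite).wasSuccessful()


releasable = is_releasable(ApplyDiscountTests)
print("releasable:", releasable)
```

In a continuous testing setup, a check like this would be triggered automatically on each code change rather than run by hand, so that a failing test blocks the change before it reaches later stages.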

Importance of Continuous Testing in AI Code Generation

AI code generation involves using machine learning models to automatically produce code based on given inputs. While this process can significantly speed up development and reduce manual coding errors, it introduces a new set of challenges. Continuous testing is essential for several reasons:

Accuracy and Precision: AI-generated code must be accurate and meet the specified requirements. Continuous testing checks that the code functions as intended and adheres to the desired logic and structure.

Quality Assurance: With continuous testing, developers can maintain high standards of code quality by identifying and addressing defects early in the development process.

Scalability: As AI models and codebases grow, continuous testing provides a scalable way to manage the increasing complexity and volume of code.

Integration and Compatibility: Continuous testing helps ensure that AI-generated code integrates seamlessly with existing systems and is compatible with different environments and platforms.

Security: Automated tests can detect security vulnerabilities in the generated code, reducing the risk of exploitation and enhancing the overall security posture of the software.

Methodologies for Continuous Testing in AI Code Generation

Implementing continuous testing in AI code generation involves several methodologies and practices:

Automated Unit Testing: Unit tests focus on individual components or functions of the generated code. Automated unit tests validate that each part of the code works correctly in isolation, helping confirm that the AI model produces accurate and reliable outputs.

Integration Testing: Integration tests evaluate how the generated code interacts with other system components. This testing checks that the code integrates seamlessly and functions correctly within the broader application environment.

End-to-End Testing: End-to-end tests simulate real-world scenarios to validate the complete functionality of the generated code. These tests verify that the code meets user requirements and performs as expected in production-like environments.

Regression Testing: Regression tests are crucial for ensuring that new code changes do not introduce unintended side effects or break existing functionality. Automated regression tests run continuously to confirm that the generated code remains stable and reliable.

Performance Testing: Performance tests evaluate the efficiency and scalability of the generated code. These tests assess response times, resource utilization, and throughput to ensure that the code performs well under various conditions.

Security Testing: Security tests identify vulnerabilities and weaknesses in the generated code. Automated security testing tools can scan for common security issues, such as injection attacks and unauthorized access, helping to safeguard the software against potential threats.
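Two of the methodologies above, unit testing and security testing, can be sketched together for a single piece of generated code. The example assumes the model's output arrives as a string of Python source; `GENERATED_SOURCE`, `FORBIDDEN_CALLS`, and the helper functions are all hypothetical, and the list of forbidden calls is a deliberately small stand-in for a real security policy.

```python
import ast

# Hypothetical output from a code-generation model; in practice this
# string would come from the model rather than being hard-coded.
GENERATED_SOURCE = '''
def slugify(title):
    words = title.lower().split()
    return "-".join(words)
'''

# Built-ins that this sketch treats as unsafe in generated code
# (an assumption; a real policy would be broader and project-specific).
FORBIDDEN_CALLS = {"eval", "exec", "compile", "__import__"}


def find_forbidden_calls(source):
    """Return the sorted names of forbidden built-ins called in the source."""
    found = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in FORBIDDEN_CALLS:
                found.add(node.func.id)
    return sorted(found)


def load_function(source, name):
    """Execute the source in a fresh namespace and return one function."""
    namespace = {}
    exec(source, namespace)  # not a sandbox; run only after the static check
    return namespace[name]


def run_unit_checks(func, cases):
    """Return (args, expected, actual) for every failing case."""
    failures = []
    for args, expected in cases:
        actual = func(*args)
        if actual != expected:
            failures.append((args, expected, actual))
    return failures


CASES = [
    (("Hello World",), "hello-world"),
    (("  Continuous   Testing ",), "continuous-testing"),
    (("",), ""),
]

security_findings = find_forbidden_calls(GENERATED_SOURCE)
unit_failures = []
if not security_findings:
    slugify = load_function(GENERATED_SOURCE, "slugify")
    unit_failures = run_unit_checks(slugify, CASES)

print("security findings:", security_findings)
print("unit failures:", unit_failures)
```

Running the static check before executing anything is a deliberate ordering: code that fails the security scan is never loaded. Keeping the same `CASES` list across model updates also gives a simple form of regression testing, since a later generation that breaks an existing case will show up in `unit_failures`.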

Challenges in Continuous Testing for AI Code Generation

While continuous testing offers numerous benefits, it also presents several challenges in the context of AI code generation:

Test Coverage: Ensuring comprehensive test coverage for AI-generated code can be challenging due to the dynamic and evolving nature of AI models. Identifying and addressing edge cases and rare scenarios requires careful planning and extensive testing.

Test Maintenance: As AI models and codebases evolve, maintaining and updating automated tests can be resource-intensive. Continuous testing requires ongoing effort to keep tests relevant and effective.

Performance Overhead: Running automated tests continuously can introduce performance overhead, especially for large codebases and complex AI models. Balancing the need for thorough testing with system performance is essential.

Data Quality: The quality of the training data used to develop AI models directly affects the quality of the generated code. Ensuring high-quality, representative, and unbiased data is critical for effective continuous testing.

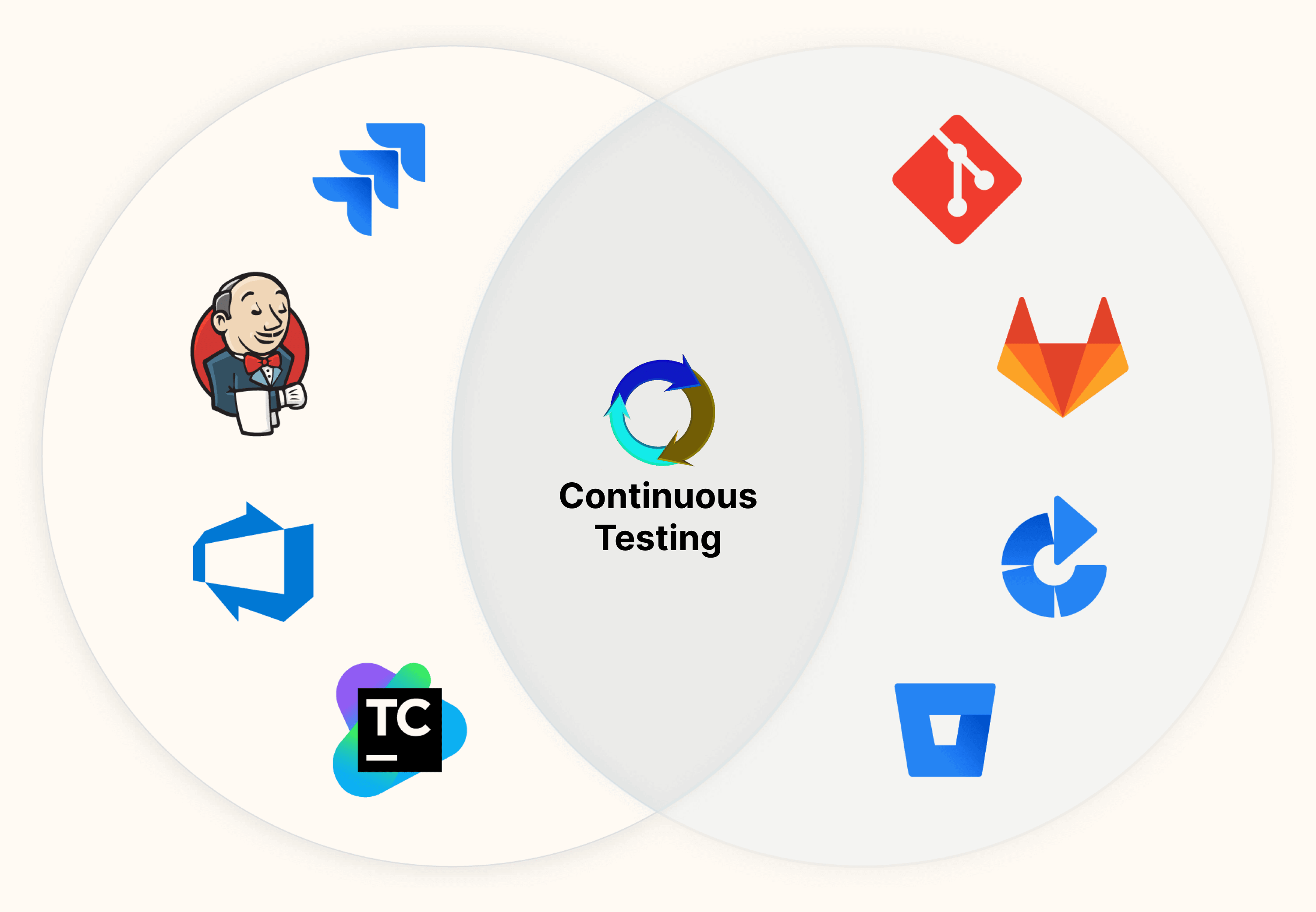

Integration Complexity: Integrating continuous testing tools and frameworks with AI development pipelines can be complex. Ensuring seamless integration and coordination between various tools and processes is crucial for successful continuous testing.
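One way to approach the test coverage challenge described above is differential testing: comparing generated code against a trusted reference on many randomized inputs, so that edge cases are exercised without being listed by hand. The sketch below is illustrative only; `reference_clamp`, `generated_clamp`, `buggy_clamp`, and `differential_test` are hypothetical names, and the input ranges are arbitrary assumptions.

```python
import random


def reference_clamp(value, low, high):
    """Trusted, hand-written reference implementation."""
    return max(low, min(value, high))


def generated_clamp(value, low, high):
    """Stand-in for a model-generated implementation (hypothetical)."""
    if value < low:
        return low
    if value > high:
        return high
    return value


def buggy_clamp(value, low, high):
    """Stand-in for a faulty generation: min and max are swapped."""
    return min(low, max(value, high))


def differential_test(candidate, reference, trials=1000, seed=0):
    """Return the random inputs on which candidate and reference disagree."""
    rng = random.Random(seed)  # fixed seed keeps failures reproducible
    mismatches = []
    for _ in range(trials):
        low = rng.randint(-50, 50)
        high = rng.randint(low, low + 100)  # guarantees low <= high
        value = rng.randint(-200, 200)
        if candidate(value, low, high) != reference(value, low, high):
            mismatches.append((value, low, high))
    return mismatches


good_mismatches = differential_test(generated_clamp, reference_clamp)
bad_mismatches = differential_test(buggy_clamp, reference_clamp)
print("correct candidate mismatches:", len(good_mismatches))
print("buggy candidate found faulty:", len(bad_mismatches) > 0)
```

This only works where a reference implementation or some other oracle exists. When none is available, the same loop can check properties instead, for example that the result always lies between `low` and `high`.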

Best Practices for Continuous Testing in AI Code Generation

To overcome these challenges and maximize the effectiveness of continuous testing in AI code generation, consider the following best practices:

Comprehensive Test Planning: Develop a robust test plan that outlines testing objectives, methodologies, and coverage criteria. Include a mix of unit, integration, end-to-end, regression, performance, and security tests to ensure thorough validation.

Automation-First Approach: Prioritize automation to streamline testing processes and reduce manual effort. Leverage automated testing frameworks and tools to achieve consistent and efficient test execution.

Incremental Testing: Adopt an incremental testing approach, in which tests are added and updated iteratively as the AI model and codebase evolve. This approach keeps tests relevant and effective throughout the development lifecycle.

Continuous Monitoring: Implement continuous monitoring and reporting to track test results, identify trends, and detect anomalies. Use monitoring tools to gain insights into test performance and identify areas for improvement.

Collaboration and Communication: Foster collaboration and communication between development, testing, and operations teams. Establish clear channels for feedback and issue resolution to ensure timely identification and resolution of defects.

Quality Data: Invest in high-quality training data to support the accuracy and reliability of AI models. Regularly update and validate training data to maintain model performance and code quality.

Scalable Infrastructure: Use scalable testing infrastructure and cloud-based resources to handle the demands of continuous testing. Ensure that the testing environment can accommodate the growing complexity and volume of AI-generated code.
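The continuous monitoring practice above can be made concrete with a small trend check over test pass rates. The sketch below flags a run as anomalous when its pass rate falls well below the average of the preceding runs. The sample `RATES`, the window size, the threshold, and the minimum spread are all assumptions made for illustration, not recommended values.

```python
from statistics import mean, stdev

# Hypothetical pass rates from consecutive test runs (fraction of tests passed).
RATES = [0.98, 0.97, 0.99, 0.98, 0.98, 0.97, 0.80, 0.98]


def detect_anomalies(rates, window=5, threshold=3.0, min_sigma=0.01):
    """Return indexes of runs whose pass rate drops sharply.

    A run is flagged when its rate is more than `threshold` standard
    deviations below the mean of the previous `window` runs.
    """
    anomalies = []
    for i in range(window, len(rates)):
        history = rates[i - window:i]
        mu = mean(history)
        # Floor the spread so a very stable history does not turn
        # tiny, harmless dips into alerts.
        sigma = max(stdev(history), min_sigma)
        if rates[i] < mu - threshold * sigma:
            anomalies.append(i)
    return anomalies


anomalous_runs = detect_anomalies(RATES)
print("anomalous runs:", anomalous_runs)  # prints: anomalous runs: [6]
```

Only the drop at index 6 is flagged. The following run is not, because the outlier now sits inside its history window and widens the spread, which is one known limitation of a simple rolling check like this.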

Conclusion

Continuous testing is a cornerstone of quality assurance in AI code generation, providing a systematic approach to validating and maintaining the integrity of AI-generated code. By integrating testing activities throughout the development lifecycle, organizations can improve the accuracy, reliability, and security of their AI models and code outputs. While continuous testing presents challenges, adopting best practices and leveraging automation can help overcome these obstacles and achieve successful implementation. As AI continues to transform software development, continuous testing will play an increasingly critical role in delivering high-quality, reliable AI-generated code.
